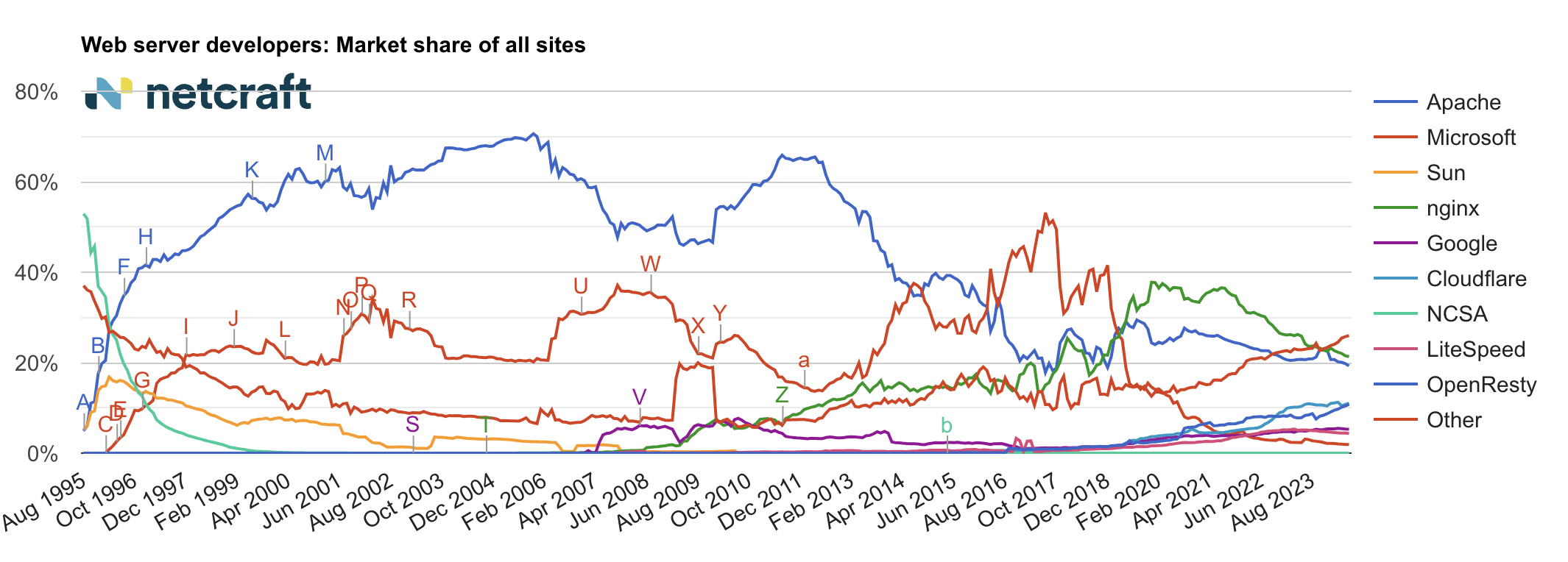

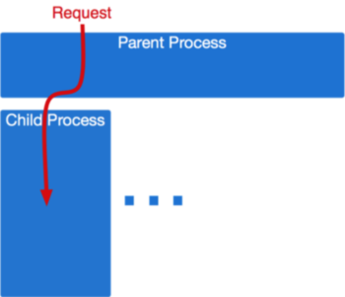

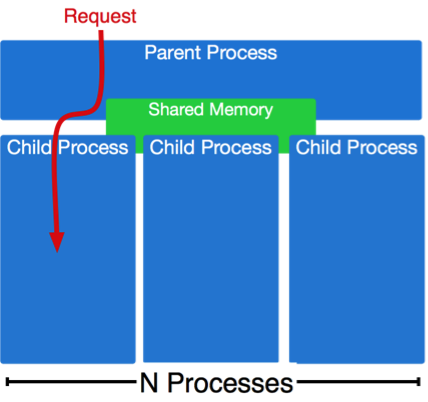

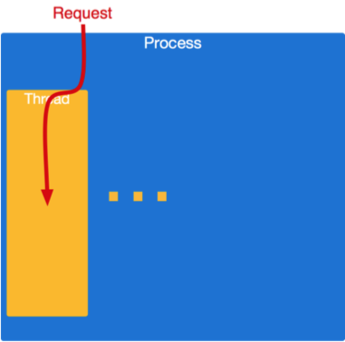

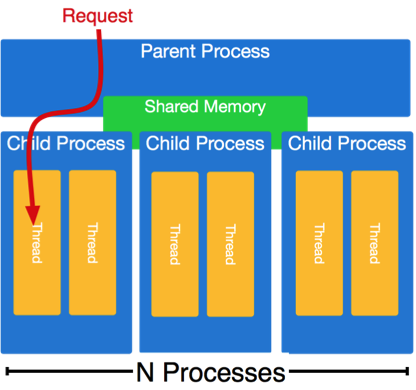

class: center, middle

# HTTP Servers

## CS291A: Scalable Internet Services

---

# End to End HTTP

By now, we should all have a reasonable understanding of HTTP.

--

Many browsers and clients exist that are able to:

* Open a TCP socket
* Send an HTTP request
* Have the request processed
* Receive the data in a response
* Reuse the socket for multiple requests

--

The software systems that handle the requests are generally divided into two
parts:

* HTTP Servers (these slides)
* Application Servers (the next set of slides)

---

# HTTP Servers

Latest: https://www.netcraft.com/blog/june-2024-web-server-survey/

--

1. Other - 26%
2. nginx - 21%
3. Apache - 19%
4. OpenResty - 11%
5. Cloudflare - 11%
6. Google - 5%
7. Microsoft - 2%

---

# HTTP Server Responsibilities

* Parse HTTP requests and craft HTTP responses _very_ fast
* Dispatch to the appropriate handler and return response
* Be stable and secure (lots of string parsing)
* Provide a clean abstraction for application servers

> How do HTTP servers provide concurrency?

---
class: center inverse middle

# Server Architectures

---

# TCP Server Concurrency Approaches

* Sequential (no concurrency)
* Process per request
* Process pool
* Thread per request
* Process/thread worker pool
* Event-driven

For select examples see:
[https://gist.github.com/bboe/6a7b03fcd110c4c6bbe5ec412f523428](https://gist.github.com/bboe/6a7b03fcd110c4c6bbe5ec412f523428)

---

# Sequential Servers

```python
bind() to port 80 and listen()
loop forever
    accept() a socket connection
    while we can read from the socket
        read() a request
        process that request
        write() its response
    close() the socket connection
```

--

> What happens if another request comes in while we're within the loop?

---

# Sequential Server Issues

If a sequential HTTP server does not process the request quickly, other clients
end up waiting or dropping their connections (**head of line blocking**).

We are building complex web applications, not simple web sites.
As a result:

--

* The requests are usually more complicated than serving a file from disk.

--

* It is common to have a web request doing a significant amount of computation
  and business logic.

--

* It is common to have a web request result in connections to multiple external
  services, e.g., databases and caching stores.

--

* These requests can be anything: lightweight or heavyweight, IO intensive or
  CPU intensive.

--

We can solve these problems if the thread of control that processes the request
is separate from the thread that `listen()`s and `accept()`s new connections.

---

# Process per Request Servers

Handle each request as a subprocess:

.left-column40[]
.right-column60[
```python
bind() to port 80 and listen()
loop forever
    accept() a socket connection
*   if fork() == 0  # child process
        while we can read from the socket
            read() a request
            process that request
            write() its response
        close() the socket connection
*       exit()
```
]

---

# Process per Request Servers

## Strengths

* Simple
* Provides significant isolation between requests

--

## Weaknesses

* How much memory is required?
* What happens as more parallel requests come in?
* How efficient is it to start a process on each request?
* How much setup and teardown work is necessary?

---

# Process Pool Servers

.left-column[]
.right-column[
Instead of spawning a process for each request, create a pool of N processes at
start-up and have them handle incoming requests when available.

The child processes `accept()` the incoming connections and use shared memory
to coordinate.

The parent process watches the load on its children and can adjust the pool
size as needed.
]

---

# Process Pool Servers

## Strengths

* Provides isolation between concurrent requests
* Children can die after _M_ requests to minimize memory leakage issues
* Process setup and teardown costs are minimized
* More predictable behavior under high load

--

## Weaknesses

* More complex than process per request
* Many processes can still mean a large amount of memory consumption

This HTTP server architecture is provided by the Apache 2.x MPM "Prefork"
module.

---

# Thread per Request Servers

Why use multiple processes at all? Instead we can use a single process and
spawn new threads for each request.

.left-column40[]
.right-column60[
```python
bind() to port 80 and listen()
loop forever
    accept() a socket connection
*   pthread_create()
        while we can read from the socket
            read() a request
            process that request
            write() its response
        close() the socket connection
        pthread_exit()
```
]

---

# Thread per Request Servers

## Strengths

* Relatively simple
* Reduced memory footprint compared to multi-process servers

--

## Weaknesses

* Worker (request handling code) must be thread-safe
* Setup and teardown need to occur for each thread (or shared data needs to be
  thread-safe)
* What about memory leaks?

---

# Process/Thread Worker Pool Servers

.left-column[]
.right-column[
Combination of the two techniques.

Parent process spawns worker processes, each with many threads. Parent
maintains process pool.

Processes coordinate through shared memory to `accept()` requests.

The number of threads per process is fixed; scaling is done at the process
level.
]

---

# Process/Thread Worker Pool Servers

## Strengths

* Faults isolated between processes, but not threads
* Threads reduce memory footprint
* Tunable level of isolation
* Controlling the number of processes and threads allows for predictable
  behavior under load

--

## Weaknesses

* Requires thread-safe code
* Uses more memory than an all-thread based approach

This HTTP server architecture is provided by the Apache 2.x MPM "Worker"
module.
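---

# Worker Pool Sketch

As a rough illustration of the worker pool idea, the sketch below starts a fixed pool of threads in one process, with every worker blocking in `accept()` on the same listening socket. It is a thread-only simplification in Python, not how Apache's Worker MPM is implemented. The names `handle`, `worker`, `start_pool`, and `demo`, and the canned `RESPONSE`, are assumptions made for this example.

```python
import socket
import threading

# Canned reply so the sketch can skip request parsing (an assumption).
RESPONSE = b"HTTP/1.1 200 OK\r\nContent-Length: 2\r\nConnection: close\r\n\r\nok"


def handle(conn):
    """Read one request (up to the blank line) and send the canned reply."""
    with conn:
        request = b""
        while b"\r\n\r\n" not in request:
            chunk = conn.recv(4096)
            if not chunk:
                return  # client went away before finishing the request
            request += chunk
        conn.sendall(RESPONSE)


def worker(listener):
    """Each pool thread blocks in accept() on the shared listening socket."""
    while True:
        conn, _addr = listener.accept()
        handle(conn)


def start_pool(listener, num_threads=4):
    """Start a fixed number of daemon worker threads."""
    for _ in range(num_threads):
        threading.Thread(target=worker, args=(listener,), daemon=True).start()


def demo():
    """Serve on an ephemeral local port and fetch one response."""
    listener = socket.create_server(("127.0.0.1", 0))
    start_pool(listener)
    with socket.create_connection(listener.getsockname()) as client:
        client.sendall(b"GET / HTTP/1.1\r\nHost: localhost\r\n\r\n")
        reply = b""
        while chunk := client.recv(4096):
            reply += chunk
    return reply
```

Running several such processes against one shared listening socket would add the process-level isolation that the worker pool design relies on.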
---
class: center inverse middle

# Digression: C10K Problem

---

# C10K Problem

> Given a 1 GHz machine with 2 GB of RAM, and a gigabit Ethernet card, can we
> support 10,000 simultaneous connections?

--

## 20,000 clients means each client gets:

* 50 kHz of CPU
* 100 KB of RAM
* 50 Kb/second of network

--

> It shouldn't take any more horsepower than that to take four kilobytes from
> the disk and send them to the network once a second for each of twenty thousand
> clients.

--

Originally posed in 1999 by Dan Kegel.

Source: [http://www.kegel.com/c10k.html](http://www.kegel.com/c10k.html)

2015: [C10M](https://migratorydata.com/2015/05/20/how-migratorydata-solved-the-c10m-problem-10-million-concurrent-connections-on-a-single-commodity-server/)

---
class: center middle

# What makes managing concurrent connections difficult?

---

# For each client the server is...

* Reading from the network socket
* Parsing its request
* Opening a file on disk
* Reading the file into memory
* Writing the memory to network

---

# For each client the server is... __blocking on I/O__

* Reading from the network socket (__blocking__)
* Parsing its request
* Opening a file on disk (__blocking__)
* Reading the file into memory (__blocking__)
* Writing the memory to network (__blocking__)

---

# Waiting on I/O

While a process/thread waits on I/O it is not runnable, but it is still not
cost-free:

* Each process/thread is considered every time the scheduler makes a decision
* Memory is occupied by the process, and its last load may have evicted other
  processes' memory from the cache

Massive concurrency slows down all processes/threads.

---

# How can we not wait on I/O?

Blocking system calls cause this problem.

> Can we accomplish our desired tasks without blocking?

--

## Yes! Using Asynchronous I/O

Use a non-blocking version of I/O system calls in combination with:

* `select()`: Given a list of file descriptors, block only until at least one
  is ready for I/O (only usable up to file descriptor number 1023 on Linux).
* `epoll_*()`: (Linux) Register to listen for events on file descriptors. Again,
  block only until at least one of the registered descriptors is ready for I/O.

---

# Select example

Assume we have a list of sockets called fd_list.

```python
loop forever:
    select(fd_list, ...)  # block until something has I/O to handle
    for fd in fd_list
        if fd is ready for IO
            handle_io(fd)
        else
            do nothing
```

--

## handle_io

* can include socket acceptance
* shouldn't make any blocking calls (use non-blocking variants)
* should avoid excessive computation
    * Use a separate thread, process, or worker pool for such purposes

---

# Event Driven Systems

Systems that operate in such a manner are called event driven systems.

Often such systems can accomplish everything using only a single process and
thread, though more may be needed for CPU-bound segments.

Widely used examples:

* NGINX
* lighttpd
* netty (java)
* node.js (JavaScript)
* eventmachine, async-http, falcon (ruby)
* twisted (python)

---

# Event Driven Servers

## Strengths

* High performance under high load
* Predictable performance under high load
* No need to be thread-safe (unless specifically adding thread-concurrency)

--

## Weaknesses

* Poor isolation
    * What happens if a bug causes an infinite loop?
* Extensions are hard to implement since they cannot use blocking syscalls
* Very complex

---

# Event Machine (Ruby) Example

Event driven code is dominated by callbacks:

```ruby
EM.run {
  test = EM::HttpRequest.new('http://google.com/').get
  test.errback { puts "Google is down! terminate?" }
  test.callback {
    search = EM::HttpRequest.new('http://google.com/search?q=em').get
    search.callback {
      # callback nesting, ad infinitum
    }
    search.errback {
      # error-handling code
    }
  }
}
```

---

# Callback Hell

.center[]

With all these callbacks, event-driven programming _can_ very easily become
complicated.

---

# Server Architectures Review

.left-column[
* Sequential (single process and thread)
    * Easy
    * No concurrency
* Process per request
    * Greatest isolation
    * Largest memory footprint
* Thread per request
    * Less isolation
    * Smaller memory footprint
]
.right-column[
* Process/thread worker pool
    * Tunable compromise between processes and threads
* Event-driven
    * Great performance under high load
    * Difficult to extend
    * Reduced isolation
]
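---

# Event-Driven Server Sketch

To make the event-driven entry in the review concrete, here is a minimal single-threaded event loop built on Python's standard `selectors` module (its `DefaultSelector` picks `epoll` on Linux when available). This is a sketch under simplifying assumptions: the reply is a canned `RESPONSE`, and the names `accept`, `read`, `serve`, and `demo` are made up for this example. The extra thread in `demo` exists only so that a client can run in the same process; the server loop itself uses one thread.

```python
import selectors
import socket
import threading

# Canned reply so the sketch can skip request parsing (an assumption).
RESPONSE = b"HTTP/1.1 200 OK\r\nContent-Length: 2\r\nConnection: close\r\n\r\nok"


def accept(selector, listener):
    """The listener is readable: accept and start watching the new socket."""
    conn, _addr = listener.accept()
    conn.setblocking(False)
    selector.register(conn, selectors.EVENT_READ, data=bytearray())


def read(selector, conn, buffer):
    """A client is readable: buffer its input, reply once the headers end."""
    chunk = conn.recv(4096)
    buffer.extend(chunk)
    if not chunk or b"\r\n\r\n" in buffer:
        if chunk:
            # Tiny reply, so a single sendall() is assumed not to block;
            # a fuller server would wait for EVENT_WRITE instead.
            conn.sendall(RESPONSE)
        selector.unregister(conn)
        conn.close()


def serve(listener, stop):
    """Single-threaded event loop; runs until the stop event is set."""
    listener.setblocking(False)
    with selectors.DefaultSelector() as selector:
        selector.register(listener, selectors.EVENT_READ, data=None)
        while not stop.is_set():
            for key, _events in selector.select(timeout=0.1):
                if key.data is None:  # only the listener has no buffer
                    accept(selector, key.fileobj)
                else:
                    read(selector, key.fileobj, key.data)


def demo():
    """Run the loop in a helper thread and fetch one response from it."""
    listener = socket.create_server(("127.0.0.1", 0))
    stop = threading.Event()
    loop = threading.Thread(target=serve, args=(listener, stop))
    loop.start()
    try:
        with socket.create_connection(listener.getsockname()) as client:
            client.sendall(b"GET / HTTP/1.1\r\nHost: localhost\r\n\r\n")
            reply = b""
            while chunk := client.recv(4096):
                reply += chunk
    finally:
        stop.set()
        loop.join()
        listener.close()
    return reply
```

Note that neither handler makes a blocking call, which is the constraint that makes extensions harder to write in this style.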