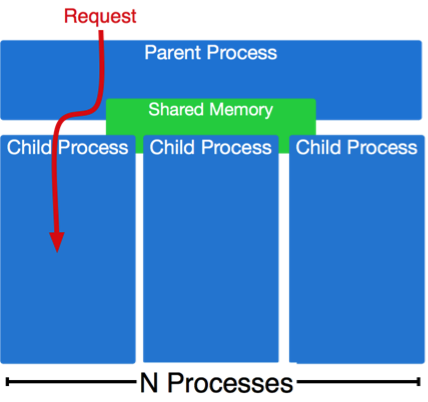

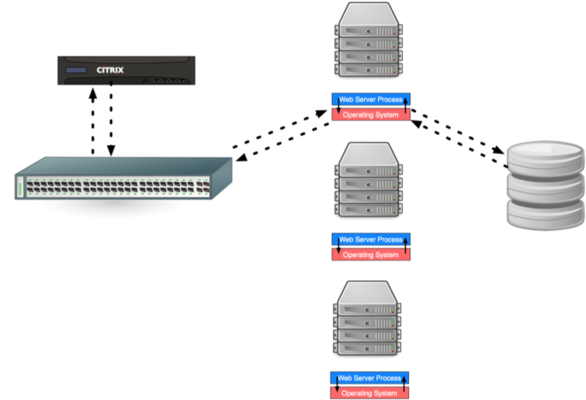

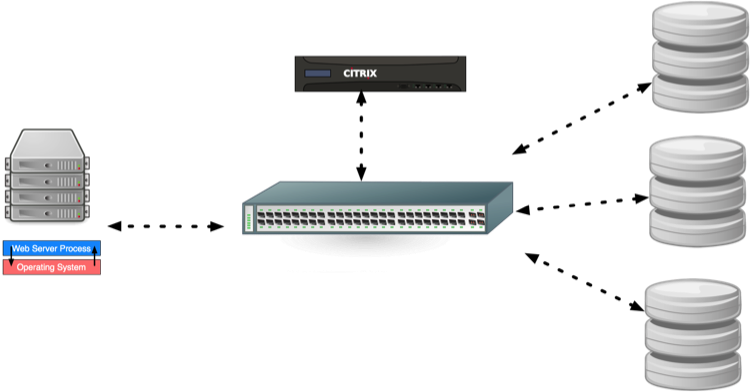

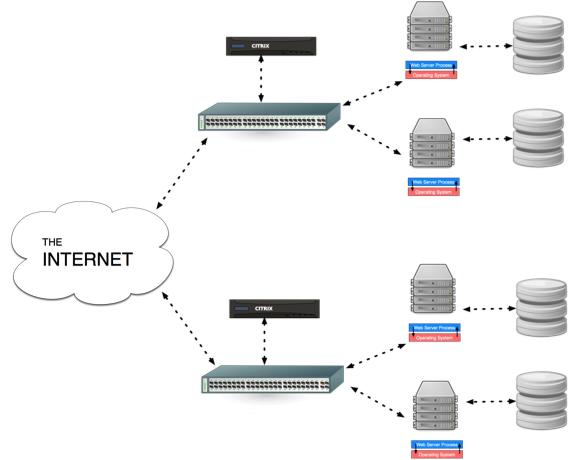

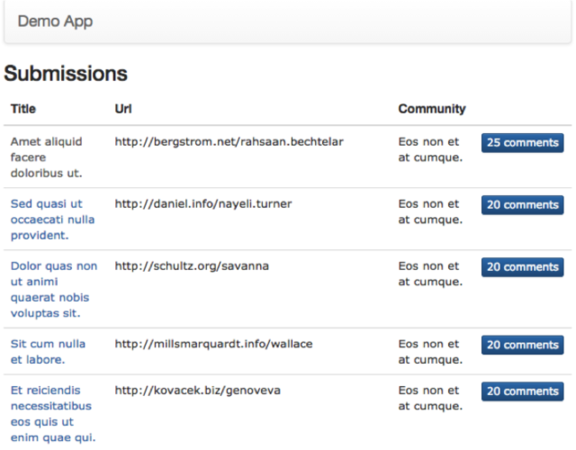

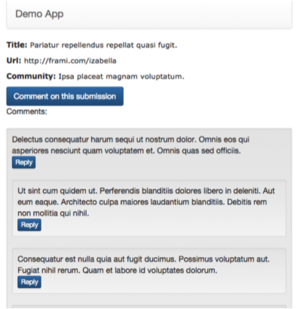

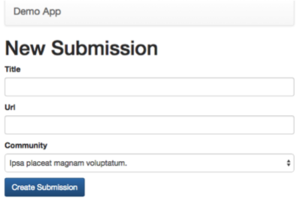

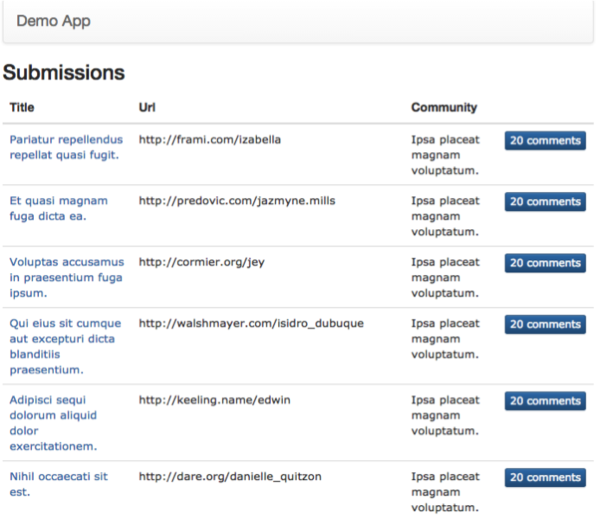

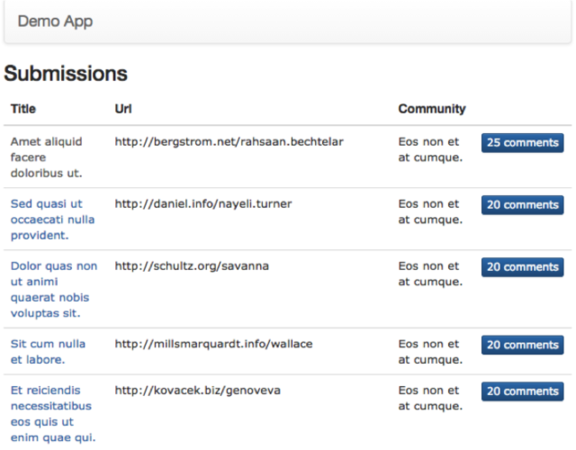

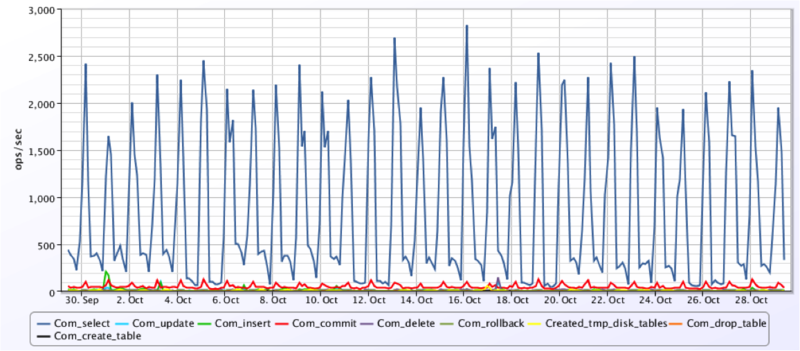

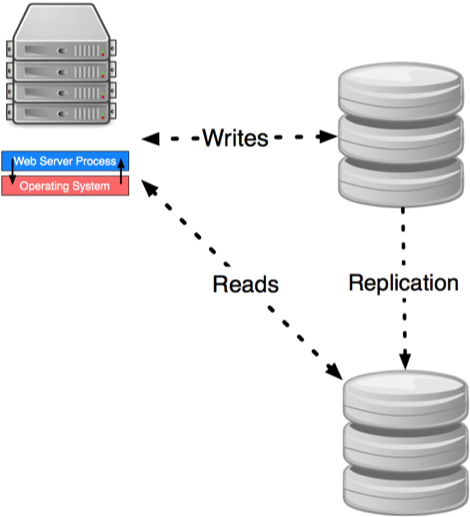

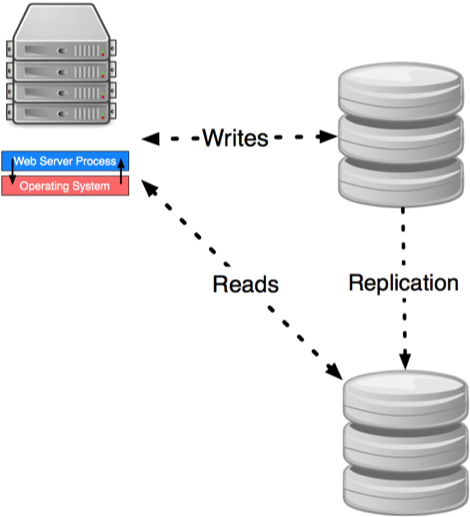

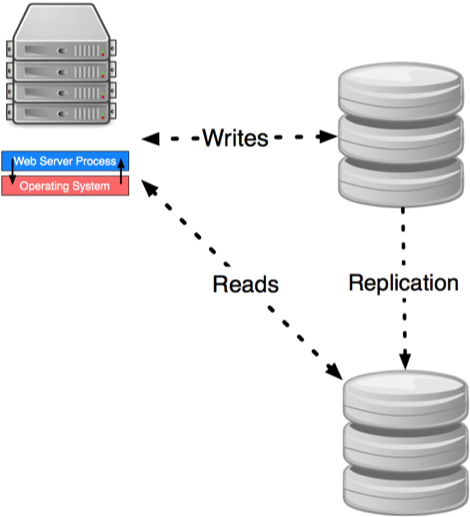

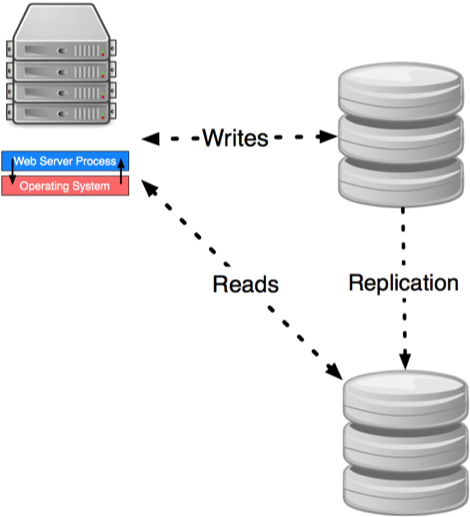

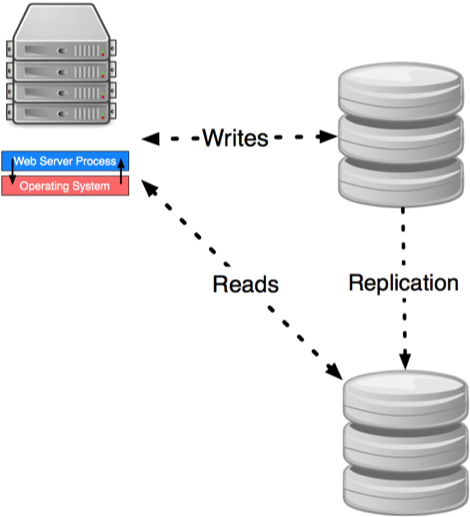

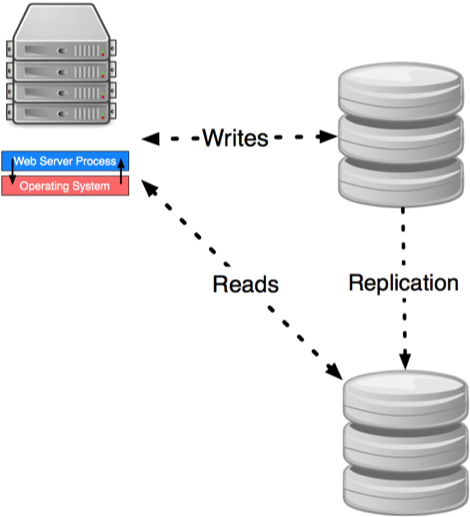

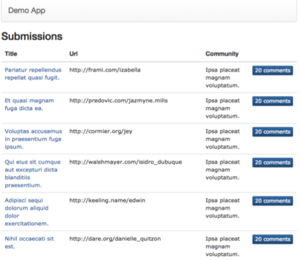

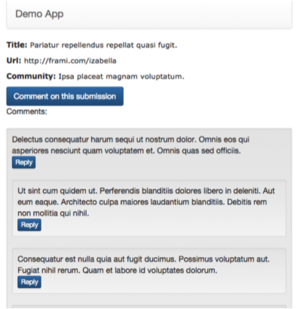

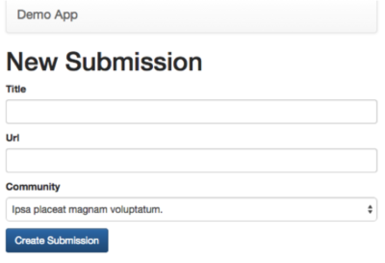

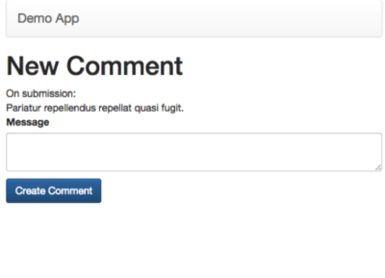

class: center, middle # RDBMS Scaling ## CS291A: Scalable Internet Services --- # Motivation You have your web application running on EC2. It is becoming increasingly popular and performance is degrading. > What do you do? --- # Handling Concurrent Requests .left-column40[ You have deployed application servers that can serve many concurrent requests. But as your site's popularity continues to grow that is not sufficient. > What do you do? ] .right-column60[  ] --- # Vertical Scaling .center[  ] You have increased your instance sizes and handled more load. However, as the popularity continues to grow you are no longer able to continue scaling vertically. > What do you do? --- # Horizontal Scaling .left-column30[ You have introduced a load balancer that distributes traffic across a pool of application servers. Nevertheless, as the traffic continues to increase, additional horizontal scaling of the application servers does not solve the problem. > What do you do? ] .right-column70[  ] --- # Caching You have properly configured HTTP caching such that unnecessary requests never happen. You are also caching heavyweight database operations. But it is still not enough to handle the increased load of your popular site. > What do you do? --- # SQL Structure and Query Optimization You have reduced the number of queries your application servers makes to the database. You have used `EXPLAIN` to discover indexes to add, and to ensure your most common SQL queries are as optimal as possible. However, your database is still a bottleneck. > What do you do? --- # Database Vertical Scaling > Is your database swapping (out of memory)? -- Scale up the amount of memory. > Is your database load too high? -- Scale up the CPU. > Are neither of those working? > What do you do? --- # Database Horizontal Scaling? With application servers we are able to scale horizontally by adding additional machines and introducing a load balancer to access them. > Can we do the same with the database?  --- # Non-trivial DB Horizontal Scaling > Can we horizontally scale the database by adding more database servers and > accessing them through a load balancer? Unfortunately, it's not that simple. Horizontal scaling works great for _stateless_ services. However, the database contains the state of our application, thus is not trivial to horizontally scale. Note: Horizontally scaling databases in this way works fine for read-only operations. --- # Problem: R(X), W(X), R(X)  --- # Techniques for Scaling Relational Databases Each of the following techniques partitions RDBMS (Relational Database Management System) in a different way: * Sharding * Service Oriented Architectures (SOA) * Separating Reads from Writes --- class: center inverse middle # Partitioning --- # Partitioning (Sharding): Idea Take a single database and __*split/partition/shard*__ it up into multiple smaller databases such that everything still works. -- > How do we handle joins across partitioned data? --- # Partitioning: Joins Any particular database join connects a small part of your database. However, transitively, database joins could connect everything together. ## E.g. Demo App * Any comment is only related to its parent (if not top-level), its children (replies), and its submission. * Submissions relate to each other through communities. * Transitively, all of these relationships could be joined across. --- # Partitioning: Separating Data .left-column40[ Find a separation of your data that ideally produces unrelated (not joined across) _partitions_. Once separated, your application cannot utilize the database to join across partitions. If you need to perform operations across sharded data, you will need to do it at the application level. Consider the performance trade-offs. Could you partition another way? ] .right-column60[  ] --- # Partitioning: Similar Data Partitioning involves splitting data of the same type (e.g., the rows of the tables). For instance if we wanted to split our `Comments` table into two partitions, we could store comments belonging to half the submissions in _partition1_, and those belonging to the other half in _partition2_. -- > What is not partitioning? -- Separating tables into their own databases is not partitioning. While this approach may work for some applications, the ability to join across tables is lost. --- # Finding the Data Assume we have partitioned the data for our application. > How can we find what partition our data is on? We need some sort of mapping to determine where to find that data. --- # Finding the Partition ## At the application server layer? > How would we implement this? ## At the load balancer? > How would we implement this? ## Across multiple load balancers? > How would we implement this? --- # At the App Server .left-column40[ Each application server contains a configuration that informs it of where each database is (IP address, DNS name) and how to map data to the database. The mapping can be arbitrarily complex. The mapping itself may even be stored in a database. ] .right-column60[  ] --- # At the load balancer The load balancer could be configured to route requests to the app servers that are configured to _talk_ to the right database. Such mappings are limited by knowledge that the load balancer can inspect: * Resource URI * Headers * Request Payload .center[] --- # Across Load Balancers .left-column40[ Host names (DNS) can be configured to point to the correct load balancer for a given request. Examples: * en.wikipedia.org vs. es.wikipedia.org (language based sharding) * google.com vs. google.co.uk (location based sharding) * na6.salesforce.com vs. naX.salesforce.com (customer based sharding) __Note__: The above examples could involve only a single load balancer. ] .right-column60[  ] --- # Finding Data: Trade-offs The approaches we just described are vary from providing more flexibility to providing more scalability. * App Server (most flexible) * Load Balancer * DNS (most scalable) --- # Partitioning and Growth Ideally the number of partitions increase as the usage of your application increases. Example: If each customer's data can be partitioned from the others, then doubling the number of customers doubles the number of partitions. --- # Email Example The data that represent one user's email conceptually requires no relation to the data representing other users' email. When a request arrives associated with a particular user, the server applies some mapping function to determine which database the user's data are located in. Should the email provider need to take down a database, they can relocate the partitioned data to another database, and update the mapping with little disruption. --- # Demo App Example * Users can create and view communities. * Users can create submissions in these communities. * Each submission has a tree of comments. > How can we partition this application? .center[  ] --- # Partitioning Demo App ## By User? This would have difficulty as logged in users will want to see communities, submissions, and comments made by other logged in users. -- ## By Submission? This would make generating submission lists for a single community difficult. -- ## By Community? Obtaining a community list will be more difficult, but cleanly partitioning comments and submissions by their community is doable. --- # Community Partitioning > How could we make community based paritioning work? We can use information in the url with any of the partitioning approaches. * http://ucsb.demo.com (community sub-domain) * http://demo.com/ucsb (community path) Either the application server connects to the right database for the `ucsb` community, or DNS/loadbalancer directs the request to an application server that always talks to the `ucsb` containing database. --- # Community Partitioning Success .left-column[  ] .right-column[  ] .center.clear[  ] --- # Community Partitioning Difficulty .left-column40[ * The global list of submissions. * List of submissions by user. * List of comments by user. > What can we do to resolve these issues? ] .right-column60[  ] --- # Solving Partitioning Problems * Modify the user interface such that the difficult to partition page does not exist. > Can you get by with only providing the list of communities? * Alternatively, periodically run an expensive background job to keep a semi-up-to-date global submission list aggregating results from across databases. --- # Partitioning in Rails Rails 6+ has built-in support for partitioning: https://guides.rubyonrails.org/active_record_multiple_databases.html ```ruby def index ... ActiveRecord::Base.connected_to(database: :customer1) ... end end ``` --- # Partitioning Trade-offs - Summary ## Strengths * If you genuinely have zero relations across partitions, this scaling path is very powerful. * Partitioning works best when partitions grow with usage. -- ## Weaknesses * Partitioning can inhibit feature development. That is your application may be perfectly partitionable today, but future features may change that. * Not easy to retroactively add partitioning to an existing application. * Transactions across partitions do not exist. * Consistent DB snapshots across partitions do not exist. --- class: center inverse middle # Service Oriented Architecture (SOA) and Microservices --- # Service Oriented Architecture _Partitioning_ splits data of the same type into separate, unrelated groups. _Service Oriented Architectures_ (SOA) do something different. They partition both the data and the code based on type and function. Like with partitioning, no joins can automatically be performed across these partitions. ??? * Parttion splits data sets of the same schema * SOA splits segments of the application based on function --- # SOA Stack The primary concept behind SOA is having many focused mini-applications (microservices). Each of these focused mini-applications is called a service. When a front-end application server needs data to satisfy a request, instead of speaking to a database, it will request data from the appropriate service. .center[] ??? * Front end application server talks to services * Services may talk to other services * Services may talk to databases * Front end application server may talk to databases --- # SOA Functions Each service is broken out by logical function. E.g.: * Users service that handles authentication and authorization * Billing service that handles credit cards and subscriptions * Account subsystem that tracks invoices .center[] ??? * Contrast this with sharding where the data is split by some criteria * Ex: SaaS application where Users, Billing and Acconts are sharded by customer * vs. SOA where Users, Billing and Accounts are separate services --- # SOA Communications With _partitioning_ or _sharding_ the application server often only talks to a single partition per request. With _SOA_ the front-end application server may communicate with many distinct services, and some of those services may talk to a handful of other services. .center[] --- # Benefits of SOA With SOA the deployment of services is decoupled. That means that each can be updated and scaled independently of the remainder of the system. This decoupling can provide isolated outages (billing is down for 5 minutes). Services lend themselves well to maintenance by a single development team thus minimizing conflicts between teams that would otherwise collectively work on a single monolithic application. .center[] ??? * A service can be unavailable and the rest of the system can continue to function. * A service can be scaled independently of the rest of the system. * A service can be developed and maintained by a single team. * A service can be written in a different language than the rest of the system. --- # SOA and the Demo App > How could we divide the Demo App into services? .center[] --- # SOA and the Demo App 1. Comments service can track comments and replies for each submission. 2. Submissions service can be responsible for all the links that are submitted. 3. Communities service can store the list of communities along with their creator. 4. Users service can manage the users in the system. --- # Demo App SOA Code Before ```ruby class CommunitiesController < ApplicationController def create if current_user.allowed_to_create_community? Community.create!(params) render :show and return end render :new, status: :unprocessable_entity end end ``` -- After ```ruby class CommunitiesController < ApplicationController def create user = UsersService.get_user_from_session(cookie) if user.allowed_to_create_community? CommunitiesService.create_submission!(params['title'], params['community'], user_id) render :show and return end render :new, status: :unprocessable_entity end end ``` --- # Modes of Dataflow - Via Databases - Via Service Calls (REST, GraphQL, gRPC, Thrift) - Via Async message passing (message brokers: RabbitMQ, ActiveMQ, Apache Kafka, Amazon SQS, Google Pub/Sub, etc.) [Source: Designing Data-Intensive Applications: The Big Ideas Behind Reliable, Scalable, and Maintainable Systems by Martin Kleppmann](https://dataintensive.net/) --- # SOA Trade-offs ## Strengths * Small encapsulated code-bases * Scales well as application size scales * Scales well as the number of teams scale -- ## Weaknesses * Transactions across services do not exist * Consistent DB snapshots across services do not exist * Application logic required to join data * Some service may grow disproportionately to others * sharding / partitioning might still be neccessary --- class: center inverse middle # Separating Reads from Writes --- # Database Operations  This graph shows significantly more _reads_ than _writes_. This may be the case for your application. ??? * More reads than writes --- # Database Horizontal Scaling .left-column[ In general, a relational database is hard to horizontally scale. However, when limited to a read-only copies, databases are very easy to horizontally scale. * Set up separate machines to act as __read replicas__ * Whenever any transaction commits to the _primary_ database, send a copy to each _replica_ and apply it ] .right-column[] ??? * Horizontal scaling is easy for read-only copies --- # Database Replication .left-column40[] .right-column60[ The sending of data from the primary to its replicas (replication) can happen either synchronously or asynchronously. ## Synchronous When a transaction is committed to mater, the primary sends the transaction to its replicas and waits until applied by all before completing. ## Asynchronous When a transaction is committed to the primary, the primary sends the transaction to its replicas but does not wait to see if the transaction is applied.] ??? * Wait until all replicas have applied the transaction * Wait until some replicas have applied the transaction * Don't wait for any replicas to apply the transaction --- # Database Replication Trade-offs .left-column[ > What are the advantages of waiting until writes are applied to all replicas? ] .right-column[] --- # Database Replication Trade-offs .left-column[ > What are the advantages of waiting until writes are applied to all replicas? Consistency. Subsequent read requests will see changes. > What are the disadvantages of waiting until writes are applied to all replicas? ] .right-column[] --- # Database Replication Trade-offs .left-column[ > What are the advantages of waiting until writes are applied to all replicas? Consistency. Subsequent read requests will see changes. > What are the disadvantages of waiting until writes are applied to all replicas? Performance. There may be many read replicas to apply changes to. ] .right-column[] --- # Database Replication Levels .left-column[] .right-column[ ## Statement-level Similar to streaming the journal from the primary to its replicas. ## Block-level Instead of sending the SQL statements to the replicas, send the consequences of those statements. > What are the advantages of each? ] --- # Database Statement-level Replication Statement-level is faster than block-level, with a catch. An SQL statement is generally more compact than its consequences. ```sql UPDATE txns SET amount=5; ``` The above query acts on all rows which may require a lot of data to transmit the consequences. However, SQL statements must now be deterministic: ```sql UPDATE txns SET amount=5, updated_at=NOW(); ``` > What is the value of `NOW()`? Such values must be communicated from the primary to its replicas. --- # PostgreSQL Replication > PostgreSQL streaming replication is asynchronous by default. If the primary > server crashes then some transactions that were committed may not have been > replicated to the standby server, causing data loss. The amount of data loss > is proportional to the replication delay at the time of failover. > When requesting synchronous replication, each commit of a write transaction > will wait until confirmation is received that the commit has been written to > the transaction log on disk of both the primary and standby server. The only > possibility that data can be lost is if both the primary and the standby > suffer crashes at the same time. This can provide a much higher level of > durability, though only if the sysadmin is cautious about the placement and > management of the two servers. Waiting for confirmation increases the user's > confidence that the changes will not be lost in the event of server crashes > but it also necessarily increases the response time for the requesting > transaction. The minimum wait time is the roundtrip time between primary to > standby. https://www.postgresql.org/docs/9.1/warm-standby.html#SYNCHRONOUS-REPLICATION ??? * What is the use case for replication Tradeoff between consistency and performance * High availability with zero data loss? * Synchronous replication * High availability with some data loss? * Asynchronous replication --- # Demo App and Read Replicas The following pages would be served from read replicas: .center[   ] --- # Demo App DB Primary Pages The controllers associated with the actions from following pages (create) would need to talk to the primary database. .center[ .left-column[] .right-column[] ] --- # Rails Read Replica Support Rails 6 has first-class support for read replicas now. "Automatic switching" must be explictly configured and enabled: > Automatic switching allows the application to switch from the primary to > replica or replica to primary based on the HTTP verb and whether there was a > recent write. -- > If the application is receiving a POST, PUT, DELETE, or PATCH request the > application will automatically write to the primary. For the specified time > after the write the application will read from the primary. For a GET or HEAD > request the application will read from the replica unless there was a recent > write. -- > Rails guarantees "read your own write" and will send your GET or HEAD request > to the primary if it's within the delay window. By default the delay is set > to 2 seconds. You should change this based on your database > infrastructure. Rails doesn't guarantee "read a recent write" for other users > within the delay window and will send GET and HEAD requests to the replicas > unless they wrote recently. --- # Trade-offs of Read Replicas ## Strengths For applications with a high read-to-write ratio: * the load on the primary database can be dramatically reduced. * read replicas can be horizontally scaled (even with a load balancer) -- ## Weaknesses Application developer needs to think about reads that affect writes vs. reads that do not affect writes as such dependent reads should occur in the same transaction as the write. --- # At AppFolio ## High usage of sharding * Each customer's data is stored in separate logical database. * Those logical databases can be moved between distinct physical database servers as needed. * DNS is used to route customer to their application server ## Medium use of SOA * Some functionality broken out into services, but the primary benefit is for scaling engineers rather than scaling load. ## Low use of Read vs. Write distinction * We utilize read replicas for backup and offline analysis. --- # Summary Horizontal scaling of relational databases is hard. There is no silver bullet, but by combining sharding, SOA, and read replicas you can get very far. For applications that need to scale writes beyond what RDBMS can offer, you need non-relational databases.